Website Crawling: How Web Crawling Works

- Crawling is the technique search engines use to traverse the web and gather information from many websites in order to build an index.

- 1) What is Crawling?

- 2) Why is Crawling Important for SEO?

- 3) How Crawling Works

- 4) Crawling and Website Architecture

- 5) Common Crawling Issues

- 6) Search Engine Support For Crawling

The main goals of search engine optimisation are to increase your website's visibility in search engines and to grow organic traffic. Crawling, the process by which search engines discover the content on your website so that it can be indexed and understood, is one of the most crucial components of SEO. This post covers the basics of crawling for SEO, along with tips for optimising your website.

If your content is not being crawled, you have no chance to gain visibility on Google surfaces.

What is Crawling?

In the context of SEO, crawling is the process in which search engine bots (also known as web crawlers or spiders) systematically discover content on a website.

These programs follow links from page to page and collect data about each site's structure, content, and other relevant aspects. The search engine stores this data in its index, which it then uses to determine which pages are relevant to a user's query and how to rank them.

Why is Crawling Important for SEO?

Crawling is essential for search engine optimisation because it is how search engines reach the material on your website before they can understand it and rank it according to relevance and quality. If your website is not crawled, it will not show up in search engine results pages (SERPs), costing you valuable organic visitors. Furthermore, even if your website is crawled, it might not be indexed correctly, which leads to low exposure and poor rankings.

How Crawling Works

A web crawler works by discovering URLs and downloading the page content. During this process, it may pass the content over to the search engine index and extract links to other web pages.
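The link-extraction step can be illustrated with a short sketch. This is a minimal example using only Python's standard library, not how any particular search engine does it. The `LinkExtractor` class, the `extract_links` helper, and the sample HTML are hypothetical names and data chosen for illustration. A real crawler would download the page over HTTP first.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urldefrag


class LinkExtractor(HTMLParser):
    """Collects absolute URLs from <a href="..."> tags on one page."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        for name, value in attrs:
            if name == "href" and value:
                # Resolve relative links against the page URL and drop #fragments.
                absolute, _fragment = urldefrag(urljoin(self.base_url, value))
                self.links.append(absolute)


def extract_links(page_url, html):
    """Return the links found in html, resolved relative to page_url."""
    parser = LinkExtractor(page_url)
    parser.feed(html)
    return parser.links


# Hypothetical page content standing in for a downloaded page.
SAMPLE_HTML = """
<html><body>
  <a href="/about">About</a>
  <a href="news/today.html#top">Today</a>
  <a href="https://other.example/page">External</a>
</body></html>
"""

links = extract_links("https://www.example.com/blog/", SAMPLE_HTML)
for link in links:
    print(link)
```

Resolving relative links and stripping fragments before queuing them helps avoid treating the same page as several different URLs.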

Search engines begin crawling when they find new web pages via sitemaps, submission forms, or links from other websites. The search engine uses a crawler to visit a page once it has been found and gather information about its structure, content, and other pertinent elements. The crawler then continues the process, exploring new pages via the links on the page.
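Sitemaps are one of the discovery sources mentioned above. As a rough sketch of how a crawler might read one, the example below parses a sitemap in the standard sitemaps.org format and picks out the URLs whose last-modified date is newer than the previous crawl. The sample sitemap, the `urls_modified_since` function, and the decision to treat a missing date as "recrawl" are assumptions made for illustration.

```python
import xml.etree.ElementTree as ET
from datetime import date

# Namespace used by the sitemaps.org XML format.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

# Hypothetical sitemap content for illustration.
SAMPLE_SITEMAP = """<?xml version="1.0" encoding="UTF-8"?>
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url>
    <loc>https://www.example.com/</loc>
    <lastmod>2024-03-01</lastmod>
  </url>
  <url>
    <loc>https://www.example.com/old-post</loc>
    <lastmod>2023-01-15</lastmod>
  </url>
  <url>
    <loc>https://www.example.com/no-date</loc>
  </url>
</urlset>
"""


def urls_modified_since(sitemap_xml, last_crawl):
    """Return sitemap URLs changed after last_crawl, or with no lastmod at all."""
    root = ET.fromstring(sitemap_xml.encode("utf-8"))
    due = []
    for url in root.findall("sm:url", SITEMAP_NS):
        loc = url.findtext("sm:loc", namespaces=SITEMAP_NS)
        lastmod = url.findtext("sm:lastmod", namespaces=SITEMAP_NS)
        if loc is None:
            continue
        # lastmod may be a date or a full timestamp; compare on the date part only.
        if lastmod is None or date.fromisoformat(lastmod.strip()[:10]) > last_crawl:
            due.append(loc.strip())
    return due


due_for_crawl = urls_modified_since(SAMPLE_SITEMAP, date(2024, 1, 1))
print(due_for_crawl)
```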

The URLs a crawler encounters generally fall into one of these categories:

- New URLs that the search engine has never seen before.

- Known URLs without any crawling instructions, which are checked regularly to see whether the content of the page has changed and the search engine index needs to be updated.

- Known URLs that have been updated and provide clear crawling instructions. These should be recrawled and reindexed, for example when an XML sitemap lists a more recent last-modified (lastmod) timestamp.

- URLs that are inaccessible or should not be followed, such as pages behind a log-in form or links marked with a "nofollow" directive.

All allowed URLs will be added to a list of pages to be visited in the future, known as the crawl queue.
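The crawl queue can be sketched as a simple first-in, first-out loop. The example below is a simplified model, not a production crawler. It uses a small in-memory link graph (`FAKE_WEB`) instead of real HTTP requests, and the `crawl`, `fetch_links`, and `is_allowed` names are hypothetical.

```python
from collections import deque

# Hypothetical link graph standing in for the web: page URL -> links found on it.
FAKE_WEB = {
    "https://example.com/": ["https://example.com/a", "https://example.com/login"],
    "https://example.com/a": ["https://example.com/b", "https://example.com/"],
    "https://example.com/b": [],
    "https://example.com/login": ["https://example.com/account"],
}

# URLs the crawler must not visit (assumed to come from robots rules or similar).
BLOCKED = {"https://example.com/login"}


def crawl(seed_urls, fetch_links, is_allowed, max_pages=100):
    """Visit pages breadth-first, queuing each allowed URL exactly once."""
    queue = deque(seed_urls)   # the crawl queue: URLs waiting to be visited
    seen = set(seed_urls)      # every URL that has ever been queued
    visited = []
    while queue and len(visited) < max_pages:
        url = queue.popleft()
        visited.append(url)
        for link in fetch_links(url):
            if link in seen or not is_allowed(link):
                continue       # skip known URLs and disallowed URLs
            seen.add(link)
            queue.append(link)
    return visited


order = crawl(
    ["https://example.com/"],
    fetch_links=lambda url: FAKE_WEB.get(url, []),
    is_allowed=lambda url: url not in BLOCKED,
)
print(order)
```

Because the log-in page is never visited in this model, the account page that is only linked from it is never discovered either. The same thing happens to real pages that are reachable only through blocked URLs.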

Crawling and Website Architecture

Website architecture refers to the structure and organization of your website's content and pages. A well-organized website with clear navigation and internal linking makes it easier for search engines to crawl and understand your content. On the other hand, a poorly structured website with broken links, duplicate content, or missing pages can make it difficult for crawlers to index your site, resulting in poor rankings and low traffic.
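One way to audit a site's structure is to model its internal links as a graph and measure how many clicks each page is from the homepage. The sketch below does this for a hypothetical site. `SITE_LINKS` and `click_depths` are illustrative names, and in practice the link graph would come from a crawl of your own site.

```python
from collections import deque

# Hypothetical internal link graph: page path -> internal links on that page.
SITE_LINKS = {
    "/": ["/products", "/blog"],
    "/products": ["/products/widget"],
    "/blog": ["/blog/post-1"],
    "/blog/post-1": ["/products/widget", "/missing-page"],
    "/products/widget": [],
    "/old-landing": ["/"],  # exists, but no other page links to it
}


def click_depths(links, home="/"):
    """Return the minimum number of clicks from home to each reachable page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            # Only count targets that exist and have not been reached yet.
            if target in links and target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


depths = click_depths(SITE_LINKS)

# Pages that exist but cannot be reached by following links from the homepage.
orphans = sorted(set(SITE_LINKS) - set(depths))

# Link targets that do not correspond to any existing page.
broken = sorted(
    {t for targets in SITE_LINKS.values() for t in targets if t not in SITE_LINKS}
)

print(depths)
print("orphan pages:", orphans)
print("broken links:", broken)
```

Deep pages, orphan pages, and broken link targets are the kinds of structural problems that make a site harder to crawl.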

Common Crawling Issues

Several common issues can impact crawling and indexing, including:

Duplicate content: You risk confusing search engines and losing ground in the results if you have the same information on different pages.

Broken links: When links are broken, crawlers may not be able to reach crucial pages on your website.

The robots.txt file: This text file tells search engine crawlers which pages or areas of your website should not be crawled. If it is not set up properly, it may unintentionally prevent the crawling of crucial pages.

Redirects: Redirects send visitors from outdated pages to more recent ones, but if they are implemented incorrectly, they can confuse crawlers and affect indexing.
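The robots.txt issue above is easy to check for. The sketch below uses Python's standard `urllib.robotparser` module to test a few URLs against a set of rules. The rules, the `ExampleBot` user agent, and the URLs are hypothetical. The `Disallow: /blog` line is deliberately too broad, to show how one rule can block an entire section.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content. "Disallow: /blog" matches every path that
# starts with /blog, so it blocks all blog posts, not just one page.
ROBOTS_TXT = """
User-agent: *
Disallow: /admin/
Disallow: /blog
"""


def make_checker(robots_txt, user_agent="ExampleBot"):
    """Return a function that reports whether a URL may be crawled."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())

    def is_crawlable(url):
        return parser.can_fetch(user_agent, url)

    return is_crawlable


is_crawlable = make_checker(ROBOTS_TXT)

for url in [
    "https://www.example.com/products",
    "https://www.example.com/admin/settings",
    "https://www.example.com/blog/post-1",
]:
    status = "allowed" if is_crawlable(url) else "blocked"
    print(status, url)
```

Running a check like this against your most important URLs after every robots.txt change is one way to catch accidental blocks early.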

Search Engine Support For Crawling

In recent years, there has been much discussion on search engines and their partners' efforts to enhance crawling.

Most of the talk has been around two APIs aimed at optimizing crawling.

The concept is that, instead of relying on search engine spiders to choose what to crawl, websites can use these APIs to push relevant URLs directly to search engines and trigger a crawl.

Theoretically, this not only gets your most recent content indexed faster, but also provides a way to efficiently remove outdated URLs, something that search engines do not currently handle very well.
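To make the push model concrete, here is a sketch of what a submission could look like. This article does not name the two APIs, so the example uses the publicly documented IndexNow protocol purely as an illustration. The endpoint and field names reflect that protocol as best understood here and should be checked against its current documentation. The key, key location, and URLs are placeholders, and the request is only built, not sent.

```python
import json
from urllib.parse import urlparse
from urllib.request import Request

# Assumed shared IndexNow endpoint; verify against the protocol documentation.
INDEXNOW_ENDPOINT = "https://api.indexnow.org/indexnow"


def build_indexnow_request(urls, key, key_location):
    """Build (but do not send) a POST request announcing changed URLs."""
    hosts = {urlparse(u).netloc for u in urls}
    if len(hosts) != 1:
        raise ValueError("all URLs in one submission must share a single host")
    payload = {
        "host": hosts.pop(),
        "key": key,                    # placeholder verification key
        "keyLocation": key_location,   # where the key file is hosted
        "urlList": list(urls),
    }
    return Request(
        INDEXNOW_ENDPOINT,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json; charset=utf-8"},
        method="POST",
    )


request = build_indexnow_request(
    ["https://www.example.com/new-article", "https://www.example.com/deleted-page"],
    key="example-key-123",
    key_location="https://www.example.com/example-key-123.txt",
)
print(request.get_method(), request.full_url)
print(request.data.decode("utf-8"))
```

Both new and removed URLs are submitted the same way in this sketch. The search engine then crawls each one and learns from the response whether the page has new content or no longer exists.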

Conclusion

To sum up, crawling is a crucial process that helps search engines find the content of your website so that it can be understood and indexed appropriately. Without adequate crawling, your website might not show up in search engine results, which would reduce traffic and lead generation. By optimising your website for crawling, you can make sure that search engines can access and comprehend your material. This can raise your website's rankings, bring in more visitors, and eventually improve sales. Keep in mind that optimising for crawling is an ongoing activity that needs continuous care and monitoring. By keeping up with the most recent best practices, you can make sure that your website meets your SEO objectives and remains competitive in search engine rankings.

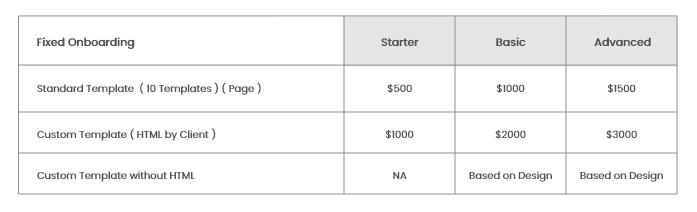

Hocalwire CMS handles the technical side of SEO for enterprise sites, including maintaining large sitemaps, getting pages indexed by Google, optimising page load times, managing assets and file systems, and warning about broken links and pages, while you handle the non-technical components. If you're searching for an enterprise-grade content management system, these are significant value adds. To learn more, get a free demo of Hocalwire CMS.