A Guide to Search Engine Algorithms: How Search Engines Operate

Search engines are your gateway to the internet. They analyze vast amounts of website content to determine how effectively it answers a particular query. But how can search engines function with so much information to sort through?

Search engines use complex algorithms to determine the value and relevance of any page in order to find, classify, and rank the billions of websites that make up the internet. It's a difficult procedure with a lot of data that needs to be presented in a way that's simple for end users to understand.

Search engines analyze all of this data by taking into account a wide range of ranking parameters related to a user's query. These include how well the site matches the query, the quality of the content, the speed of the site, the metadata, and more. Search engines combine these data points to determine the overall quality of every website. The user is then shown the pages in the order determined by those calculations.
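To make the idea of combining data points concrete, here is a minimal sketch of a weighted score. The signal names, the weights, and the example URLs are all assumptions made up for illustration; no search engine publishes its real weights, and real systems use far more signals than four.

```python
from dataclasses import dataclass

# Hypothetical weights for illustration only; they sum to 1.0.
WEIGHTS = {"relevance": 0.5, "quality": 0.3, "speed": 0.1, "metadata": 0.1}


@dataclass
class Page:
    url: str
    signals: dict  # each signal is a score between 0.0 and 1.0


def overall_score(page: Page) -> float:
    """Combine the individual signals into one weighted score."""
    return sum(WEIGHTS[name] * page.signals.get(name, 0.0) for name in WEIGHTS)


def rank(pages: list) -> list:
    """Return URLs ordered from highest to lowest overall score."""
    return [p.url for p in sorted(pages, key=overall_score, reverse=True)]


pages = [
    Page("example.com/a", {"relevance": 0.9, "quality": 0.4, "speed": 0.5, "metadata": 0.5}),
    Page("example.com/b", {"relevance": 0.6, "quality": 0.9, "speed": 0.9, "metadata": 0.8}),
]
print(rank(pages))
```

In this toy data, page "b" outranks page "a" even though "a" is more relevant, because "b" scores better on quality, speed, and metadata. That is the practical point: a page is judged on the combination, not on a single signal.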

In addition to giving you insight into why specific pieces of content rank well, knowing the procedures used behind the scenes by search engines to make these judgments enables you to generate new material that has the potential to rank higher.

After reviewing the fundamental principles around which each search engine algorithm is created, let's examine the specific methods employed by four leading platforms.

The Operation of Search Engines

Search engines must be able to precisely identify the types of information that are available and deliver it to consumers in a logical manner in order to be effective. Crawling, indexing and ranking are the three primary processes they use to achieve this.

They find newly published content through these activities, store it on their servers, and organize it for your use. Let's examine what occurs throughout each of these steps in more detail:

Crawl: Web crawlers, also referred to as bots or spiders, are sent out by search engines to examine the content of websites. Web crawlers examine information such as URLs, sitemaps, and code to determine the types of content being shown, paying special attention to new websites and to existing content that has recently changed.

Index: The search engines must choose how to arrange the data after they have crawled a website. They analyze website data for positive or negative ranking signals, then index it and store it in the appropriate place on their servers.

Rank: During the indexing phase, search engines begin deciding where to place particular material on the search engine results page (SERP). The ranking is determined by evaluating a variety of parameters based on the quality of the content and its relevance to the end user's search.
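The three steps above can be sketched in a few lines. This is a toy model under stated assumptions: the "web" is a hypothetical in-memory dictionary, the index is a simple inverted index (word to pages), and ranking just counts matching words. Real engines are vastly more sophisticated at every step.

```python
from collections import defaultdict

# Hypothetical in-memory "web": URL -> page text.
WEB = {
    "site.test/coffee": "how to brew coffee at home",
    "site.test/tea": "how to brew green tea",
    "site.test/beans": "coffee beans and roasting",
}


def crawl(web):
    """Crawl: visit each page and yield its URL and its words."""
    for url, text in web.items():
        yield url, text.lower().split()


def build_index(crawled):
    """Index: map each word to the pages that contain it, with counts."""
    index = defaultdict(dict)
    for url, words in crawled:
        for word in words:
            index[word][url] = index[word].get(url, 0) + 1
    return index


def rank(index, query):
    """Rank: score pages by how often they contain the query words."""
    scores = defaultdict(int)
    for word in query.lower().split():
        for url, count in index.get(word, {}).items():
            scores[url] += count
    # Highest score first; ties are broken alphabetically by URL.
    return sorted(scores, key=lambda u: (-scores[u], u))


index = build_index(crawl(WEB))
print(rank(index, "brew coffee"))
```

Note that the index is built once, before any query arrives, and the ranking step only does lookups. That separation is what lets a results page appear quickly.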

During this process, choices are made on the value that a website might offer to its visitors. An algorithm serves as a guide for these choices. Knowing how an algorithm functions enables you to produce content that performs better across all platforms.

Each platform employs a different set of ranking factors to decide where websites appear in the search results, whether it's RankBrain for Google and YouTube, Space Partition Tree And Graph (SPTAG) for Bing, or a proprietary codebase for DuckDuckGo. It is simpler to design particular pages to rank well if you keep these characteristics in mind when you write content for your website.

Platform-specific Search Engine Algorithms

Each search engine has a unique method for surfacing search results. We'll examine the top four platforms on the market right now and analyze how they identify relevant, high-quality material.

Google's Search Engine

Google is the most used search engine on the planet. It often holds over 90% of the market and handles about 3.5 billion searches every day. Google is typically secretive about the inner workings of its algorithm, but it does give some high-level information about how it prioritizes websites on the results page.

Every day, new websites are made. Google can find these pages by following links from previously crawled content or when a website owner submits a sitemap directly. Any improvements to existing content can also be reported by requesting that Google recrawl a given URL. This is done through Google's Search Console.

Google doesn't specify how frequently websites are inspected, but any new content that is linked from previously crawled content will eventually be detected as well.
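Discovery by following links is essentially a graph traversal. The sketch below is a simplified illustration, not Google's crawler: the link graph is a hypothetical dictionary, and the `seeds` argument stands in for pages the crawler already knows about (for example, URLs listed in a submitted sitemap).

```python
from collections import deque

# Hypothetical link graph: page -> pages it links to.
LINKS = {
    "home": ["blog", "about"],
    "blog": ["post-1", "post-2"],
    "about": ["home"],
    "post-1": ["post-2"],
    "post-2": [],
    "orphan": ["home"],  # no page links here, so link-following cannot reach it
}


def discover(seeds, links):
    """Breadth-first traversal from the seed pages; returns pages in discovery order."""
    seen = set(seeds)
    order = list(seeds)
    queue = deque(seeds)
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in seen:
                seen.add(target)
                order.append(target)
                queue.append(target)
    return order


print(discover(["home"], LINKS))            # "orphan" is never found
print(discover(["home", "orphan"], LINKS))  # listing it as a seed fixes that
```

The "orphan" page shows why sitemaps matter: a page that nothing links to is invisible to a link-following crawler until someone tells the crawler it exists.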

When the web crawlers have gathered sufficient data, they return it to Google for indexing.

Analyzing website data, such as textual content, pictures, videos, and the technical site structure, is the first step in the indexing process. In order to determine the purpose of any page it has crawled, Google looks for both positive and negative ranking signals, such as keywords and the age of the website.

There are 100,000,000 gigabytes of data and billions of pages in the Google website index. Google employs a knowledge base called Knowledge Graph and a machine learning system called RankBrain to organize this data. All of this combines to help Google deliver the most pertinent content to users. They begin the ranking process after the indexing is finished.

Everything up to this point happens in the background, before a user ever interacts with Google's search engine. Ranking is the process that takes place in response to a user's search query. When someone conducts a search, Google takes five important things into account:

Query meaning

This establishes the purpose of the end user's query. When someone conducts a search, Google analyzes the query to discover exactly what they are looking for. It parses each query using sophisticated language models built from previous searches and usage patterns.

Relevance of a website

After determining the purpose of a user's search query, Google examines the content of candidate websites to determine which is the most relevant. Keyword analysis is the main driver here. A website's keywords must correspond to Google's interpretation of the query a user entered.

Content quality

After matching keywords, Google goes one step further and evaluates the quality of the content on the relevant websites. By evaluating a website's authority, PageRank, and freshness, it can select which results to display first.
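For readers curious about what a link-based authority score looks like, here is a minimal sketch of the classic PageRank idea using power iteration. It follows the originally published formulation, not whatever Google runs today, which is not public. The link graph, the damping factor of 0.85, and the iteration count are assumptions for illustration, and every linked page is assumed to appear as a key in the graph.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Approximate PageRank by repeatedly redistributing rank along links."""
    pages = list(links)
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}
    for _ in range(iterations):
        # Every page starts each round with a small baseline share.
        new_rank = {p: (1.0 - damping) / n for p in pages}
        for page, targets in links.items():
            if targets:
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # A page with no outgoing links spreads its rank evenly.
                share = damping * rank[page] / n
                for p in pages:
                    new_rank[p] += share
        rank = new_rank
    return rank


# Hypothetical graph: three pages link to "c", and "c" links to "a".
LINKS = {"a": ["c"], "b": ["c"], "c": ["a"], "d": ["c"]}
ranks = pagerank(LINKS)
for page in sorted(ranks, key=ranks.get, reverse=True):
    print(page, round(ranks[page], 3))
```

Page "c" ends up with the highest score because the most pages link to it, and "a" comes second because it is linked from the most authoritative page. Pages "b" and "d", which nothing links to, keep only the baseline share.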

Usability of a website

Websites that are simple to use receive a higher rating from Google. Everything from site performance to responsiveness is covered under usability.

Added context and circumstances

This process customizes searches based on prior user activity and certain Google platform settings.

Google has already examined websites to determine what keywords and intent they match before a person conducts a search. This advance work makes it possible to populate the results page quickly when a search is performed, and it also enables Google to deliver the most pertinent results.

Google, the most widely used search engine, essentially created the structure for how search engines evaluate material. The majority of marketers create content expressly to rank on Google, which may prevent them from making use of other platforms.

Search Algorithm for Bing

Microsoft's proprietary search engine, Bing, surfaces results using the open-source Space Partition Tree and Graph (SPTAG) vector-search algorithm. This marks a clear departure from Google's keyword-based search.

Because SPTAG is open source, its underlying code is available for anybody to view and comment on. This open approach runs counter to Google's strict control over its algorithms. The code itself is made up of two modules, an index builder and a searcher:

Index Builder: The program that organizes internet content into vectors

Searcher: The method by which Bing links vectors in their index to search requests

The second significant distinction between Bing and Google lies in how the data is stored and indexed. Rather than using a keyword-first paradigm like Google's, Bing organizes data into discrete data points known as vectors. A vector is a numerical representation of an idea, and Bing's search structure is built around this concept.
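A small example may help show what "index builder" and "searcher" mean in a vector-based system. This sketch is not SPTAG's API: the function names, the tiny vocabulary, and the word-count vectors are assumptions chosen to keep the example readable. Production systems typically use learned embeddings with hundreds of dimensions rather than word counts.

```python
import math

# Hypothetical vocabulary; each vector dimension counts one of these words.
VOCAB = ["coffee", "tea", "brew", "roast", "laptop"]


def to_vector(text):
    """Represent a piece of text as a list of word counts over VOCAB."""
    words = text.lower().split()
    return [words.count(term) for term in VOCAB]


def cosine(a, b):
    """Cosine similarity: 1.0 means same direction, 0.0 means nothing in common."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def build_index(docs):
    """Index builder: turn each document into a vector."""
    return {doc_id: to_vector(text) for doc_id, text in docs.items()}


def search(index, query, k=2):
    """Searcher: return the k documents whose vectors are closest to the query."""
    q = to_vector(query)
    return sorted(index, key=lambda d: cosine(index[d], q), reverse=True)[:k]


DOCS = {
    "d1": "brew coffee and roast coffee beans",
    "d2": "brew green tea",
    "d3": "best laptop for work",
}
index = build_index(DOCS)
print(search(index, "brew coffee"))
```

The searcher never compares words directly. It converts the query to a vector and looks for stored vectors that point in a similar direction, which is the core idea behind vector search.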

Bing answers search queries using a technique known as approximate nearest neighbor (ANN) search, which returns results more quickly by looking at how close specific vectors are to one another.
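The word "approximate" is the important part. The sketch below illustrates one simple way to trade accuracy for speed: split the vectors into partitions around a few centroids, then search only the partition closest to the query. This is a deliberately simplified illustration and does not reproduce SPTAG's actual tree and graph structures; the centroids and the two-dimensional points are made up for the example.

```python
import math


def nearest(point, candidates):
    """Exact nearest neighbor by brute force over the given candidates."""
    return min(candidates, key=lambda c: math.dist(point, c))


def build_partitions(vectors, centroids):
    """Assign every vector to the partition of its closest centroid."""
    partitions = {c: [] for c in centroids}
    for v in vectors:
        partitions[nearest(v, centroids)].append(v)
    return partitions


def approx_nearest(query, partitions):
    """Search only the partition whose centroid is closest to the query."""
    centroid = nearest(query, list(partitions))
    bucket = partitions[centroid]
    return nearest(query, bucket) if bucket else None


# Hypothetical 2-D vectors and two hand-picked centroids.
VECTORS = [(1.0, 1.0), (2.0, 0.0), (4.9, 4.9), (5.2, 5.2), (9.0, 9.0), (11.0, 10.0)]
CENTROIDS = [(0.0, 0.0), (10.0, 10.0)]
partitions = build_partitions(VECTORS, CENTROIDS)

query = (5.02, 5.02)
print("approximate:", approx_nearest(query, partitions))
print("exact:      ", nearest(query, VECTORS))
```

For this query, the approximate search returns (5.2, 5.2) while the true nearest vector is (4.9, 4.9), which sits just across the partition boundary. The approximate search compared the query against half as many vectors, and its answer is close but not perfect. At the scale of a web index, that trade is usually worth making.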

Despite the fundamentally different guiding concepts behind Bing's search structure, the crawl, index, and rank steps are still used to construct its database.

Bing crawls web pages for fresh content or revisions to older information, then produces vectors to store that data in its index. After that, it considers particular ranking parameters. Bing does not include pages without ranking authority, which means that new pages have a harder time ranking if they aren't linked from an existing page with more authority. This is another major difference between Bing and Google.

If you're considering writing content just for Bing, you should start by comparing the top-ranking websites and featured snippets on both platforms. The differences will make it easier for you to grasp why Bing prioritizes content differently than Google.

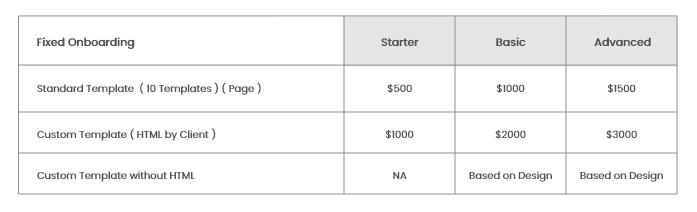
